The Tooling Bottleneck: An Overview of the AI/MCP Tool Overload Problem

1. Introduction: The Paradox of Capability

The operational paradigm for agentic AI is centered on the model's ability to select and utilize a suite of external tools—from calendar management and email clients to complex database queries and web search APIs. The objective is to create a versatile assistant capable of executing multi-step, real-world tasks. The logical, yet flawed, assumption has been that a larger toolset equates to a more powerful agent.

In practice, a critical scaling issue has emerged. As the number of available tools increases, the performance of the underlying Large Language Model (LLM) does not scale linearly. Instead, it enters a state of degradation characterized by reduced accuracy in tool selection, increased task failure rates, and higher operational costs. This “tool overload” is not a peripheral issue but a fundamental challenge to the current approach of building agentic systems. As one developer on Hacker News articulated in a discussion about the Model Context Protocol (MCP), a standard for tool integration, the problem is foundational: “My point is that adding more and more and more tools doesn't scale and doesn't work. It only works when you have a few tools. If you have 50 MCP servers enabled, your requests are probably degraded.” (Source).

This phenomenon is creating a tangible sense of frustration. Developers and even casual users report feeling overwhelmed, with one Reddit user describing their experience with multiple AI tools as "chaotic" and losing track of "what tool I used for what." (

). This article will deconstruct this problem from a technical standpoint, supported by evidence from academic research and developer forums, to formalize the tooling bottleneck as a primary obstacle for the future of AI.2. Technical Underpinnings of the Tool Overload Problem

The tool overload problem stems from the architectural limitations of contemporary LLMs, primarily related to their finite context windows and attention mechanisms. While context windows have expanded dramatically, the methods for utilizing that space have not kept pace, leading to significant performance trade-offs.

2.1. Context Window Bloat and Reasoning Degradation

An LLM's ability to use a tool is contingent on that tool's definition being present within its context window—the model's effective short-term memory. This definition includes the tool's name, a natural-language description of its function, and its parameters. As more tools are added, the aggregate size of these definitions, or “token bloat,” consumes an increasingly large portion of the context window.

This has two negative consequences. First, it directly increases the computational cost and latency of every request. As research from Meibel AI demonstrates, there is a direct correlation between the number of input tokens and the time it takes to generate an output token. (Source). Second, and more critically, it crowds out the space available for the actual task-specific context. This includes the user's instructions, the history of the conversation, and the intermediate “thoughts” the model needs to reason through a problem. As Sean Blanchfield notes in his analysis, “The MCP Tool Trap,” this forces a compromise between providing detailed tool descriptions (for accuracy) and leaving sufficient space for the model's reasoning process.

2.2. Diminished Accuracy and the "Lost in the Middle" Problem

When an LLM is presented with an extensive set of tools, its ability to select the correct one for a given task deteriorates. The model's “attention” mechanism must evaluate a larger set of possibilities, which increases the probability of error. This is compounded by the "lost in the middle" phenomenon, where models show better performance recalling information placed at the beginning or end of the context window, while information in the middle is often ignored or misremembered.

This can manifest as:

- Incorrect Tool Selection: Choosing a tool that is functionally inappropriate for the task.

- Parameter Hallucination: Invoking the correct tool but with invented or incorrect parameters.

- Tool Interference: Descriptions of similarly-named or functionally-overlapping tools can “confuse” the model, leading to unpredictable behavior.

This phenomenon is empirically supported. The research paper “Less is More: On the Selection of Tools for Large Language Models” demonstrates a clear negative correlation between the number of available tools and the accuracy of tool-calling. A developer on the r/AI_Agents subreddit corroborates this from a practical standpoint: “[once an agent has to acces to 5+ tools... the accuracy drops. Chaining multiple tool calls becomes unreliable.]” (

).

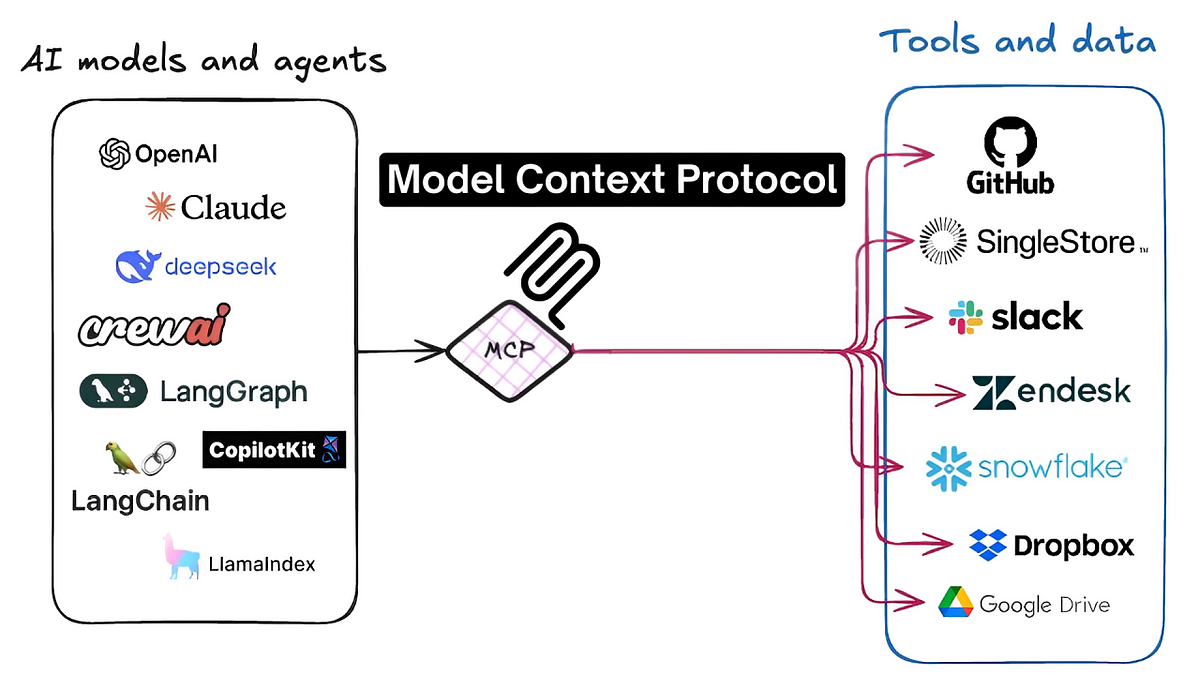

3. Case Study: The Model Context Protocol (MCP) Ecosystem

The tool overload problem is particularly acute within the MCP ecosystem. MCP is a standardized protocol designed to facilitate seamless interaction between AI agents and thousands of third-party tools (MCP servers). While this standard has catalyzed innovation, it has also become a focal point for the scaling issue.

The very design of MCP, which relies on discoverable, natural-language tool definitions, directly exposes agents to the context window bloat and attention deficit problems. The temptation for users and developers is to enable a large number of MCP servers to maximize an agent's potential capabilities. However, as a Hacker News commenter powerfully stated, this approach is fundamentally flawed:

“MCP does not scale. It cannot scale beyond a certain threshold. It is Impossible to add an unlimited number of tools to your agents context without negatively impacting the capability of your agent. This is a fundamental limitation with the entire concept of MCP... You will see posts like “MCP used to be good but now…” as people experience the effects of having many MCP servers enabled. They interfere with each other.” (Source)

This highlights that the problem is not merely an implementation detail but an architectural challenge inherent to the current “load everything” approach. Another discussion pointed out that the bottleneck is the models themselves, which “struggle when you give them too many tools to call. They're poor at assessing the correct tool to use when given tools with overlapping functionality or similar function name/args.” (Source). The consensus in these technical communities is that without a solution, the promise of a vast, interconnected tool ecosystem via MCP will remain unfulfilled, limited by the cognitive capacity of the very models it seeks to empower.

4. Proposed Solution Architectures

The industry is converging on two primary approaches to mitigate the tool overload problem. These solutions aim to move away from the naive method of loading all available tools into the context window for every task, a practice that has proven inefficient and error-prone.

4.1. Server-Side Solutions: Tool Abstraction and Hierarchies

This approach focuses on making the tool servers themselves more intelligent. Instead of exposing a large number of granular, low-level tools to the agent, a server-side solution can abstract them into higher-level, composite capabilities. This reduces the number of choices the LLM has to make at any given time.

An example of this is the “strata” concept proposed by Klavis AI. According to their API documentation, this system allows for the dynamic creation of tool hierarchies. This could enable an agent to first select a broad category (e.g., “file management”) and only then be presented with a smaller, more relevant subset of tools (e.g., “create_file,” “update_file”), thus reducing the cognitive load on the model at each step.

4.2. Client-Side Solutions: Dynamic Tool Selection and Filtering

This approach places the intelligence within the client application that orchestrates the AI agent. The core idea is to implement a pre-processing or routing layer that analyzes the user's intent before engaging the primary LLM. This layer is responsible for dynamically selecting a small, highly relevant subset of tools from a much larger library and injecting only those into the context window for the specific task at hand.

An example of this is the approach taken by Jenova, which uses an intermediary system that intelligently filters and ranks available tools based on the user's natural language request. As detailed in the analysis found in “The Tooling Bottleneck,” this method creates a “just-in-time” toolset for the LLM. By doing so, it keeps the context window lean and focused, thereby preserving the model's reasoning capacity and dramatically improving tool selection accuracy. This aligns with insights from Memgraph, which argues that the key is "feeding LLMs the right context, at the right time, in a structured way," rather than simply building bigger models. (Source).

5. Conclusion: Towards Scalable Agentic Architectures

The tool overload problem represents a significant and fundamental bottleneck to the advancement of capable, general-purpose AI agents. The initial strategy of maximizing tool quantity has proven to be a fallacy, leading to degraded performance, reduced reliability, and a poor user experience. The evidence from both academic research and extensive developer discussion indicates a clear consensus: raw scaling of tool inputs is an architectural dead end.

As a McKinsey report on agentic AI notes, scaling requires a new "agentic AI mesh"—a modular and resilient architecture—to manage mounting technical debt and new classes of risk. (Source). The path forward lies not in limiting the number of available tools, but in developing more sophisticated architectures for managing them. The future of agentic AI will depend on systems that can intelligently and dynamically manage context. Whether through server-side abstraction or client-side dynamic filtering, the next generation of AI agents must be able to navigate a vast ocean of potential tools with precision and focus. Overcoming this tooling bottleneck is a necessary and critical step in the evolution from functionally limited AI to truly scalable and intelligent agentic systems.