Local MCP Servers: Extend AI Tools with Privacy and Performance

2025-07-17

Local MCP servers change how AI applications interact with your data and tools by running directly on your machine. Unlike cloud-hosted integrations that send sensitive information over the internet, a local server handles file, database, and application access on-device, which keeps that access under your control and removes network round trips from each tool call.

Key advantages:

- ✅ Zero data transmission to external servers

- ✅ Sub-millisecond response times via direct communication

- ✅ Full offline functionality without internet dependency

- ✅ Unlimited customization for any local workflow

To understand why this architecture matters, let's examine the challenges facing developers and organizations building AI-powered applications today.

Quick Answer: What Are Local MCP Servers?

Local MCP servers are programs that run on your computer to extend AI capabilities by providing secure, direct access to local files, databases, and applications. They use the Model Context Protocol (MCP) standard to expose tools and data sources to AI clients without sending information over the internet.

Key capabilities:

- Process sensitive data entirely on-device for maximum privacy

- Enable AI to interact with local files, codebases, and applications

- Deliver real-time performance through direct inter-process communication

- Function completely offline without cloud dependencies

The Problem: AI Tools Need Secure Local Access

As AI assistants become more sophisticated, they require deeper integration with users' personal environments. However, this creates fundamental challenges:

73% of developers express concerns about AI tools accessing their proprietary codebases and sensitive data.

Critical barriers to AI adoption:

- Privacy Risks – Transmitting sensitive files and data to cloud services

- Network Latency – Delays from round-trip communication with remote servers

- Connectivity Requirements – Dependence on stable internet connections

- Limited Customization – Inability to integrate with specialized local tools

- Data Governance – Compliance challenges with enterprise security policies

Privacy and Security Concerns

When AI tools require cloud connectivity, every file access, database query, or code snippet must be transmitted to external servers. For enterprises handling proprietary code or regulated data, this creates unacceptable risk.

$4.45 million – Average cost of a data breach in 2023, according to IBM Security.

Organizations subject to GDPR, HIPAA, or other data protection regulations face additional compliance burdens when using cloud-based AI services. Even with encryption, the act of transmitting data externally often violates internal security policies.

Performance Bottlenecks

Cloud-based AI tools introduce latency at every interaction. A simple file read operation that takes microseconds locally can require 100-500 milliseconds when routed through remote servers.

200-300ms – Typical round-trip latency for cloud API calls, according to Cloudflare.

For interactive workflows—code completion, real-time debugging, or rapid file searches—this delay compounds quickly, degrading user experience and productivity.

Offline Limitations

Cloud-dependent AI tools become completely unusable without internet connectivity. Developers working on flights, in remote locations, or during network outages lose access to critical AI assistance precisely when alternative resources are also limited.

The Solution: Local MCP Servers for Secure AI Extension

Local MCP servers solve these challenges by running directly on your machine, creating a secure bridge between AI clients and your local environment. The Model Context Protocol provides a standardized architecture for this integration.

| Traditional Cloud AI | Local MCP Servers |
|---|---|
| Data transmitted to external servers | All processing on-device |
| 200-500ms network latency | Sub-millisecond response times |
| Requires internet connectivity | Full offline functionality |
| Limited to pre-built integrations | Unlimited custom tool creation |
| Third-party data access | User-controlled permissions |

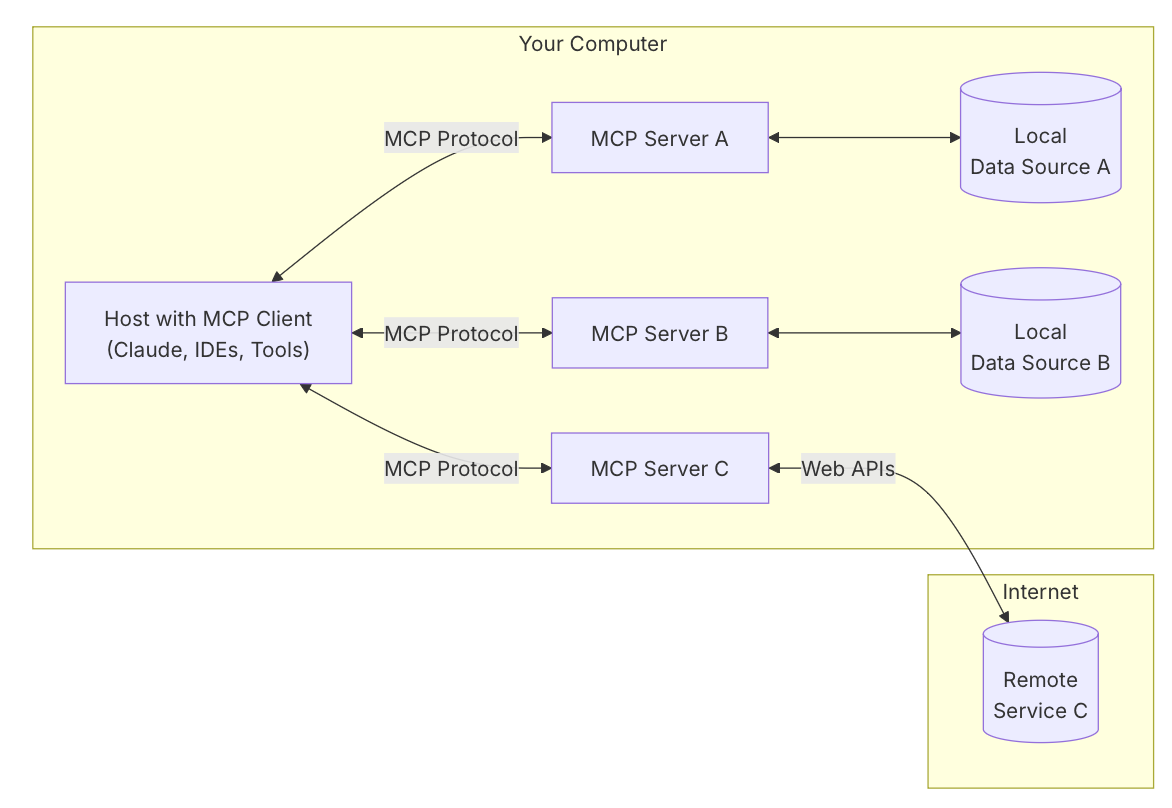

Understanding the MCP Architecture

The Model Context Protocol operates on a three-component model:

Host Application: The primary AI-powered application users interact with, such as an AI chat assistant, code editor, or desktop agent. This is where users make requests and receive responses.

MCP Client: Embedded within the host, the client handles protocol communication. It discovers available tools from connected servers, translates user requests into tool calls, and manages the execution flow.

MCP Server: A separate program exposing specific capabilities through standardized interfaces. In local setups, servers run on the same machine and communicate via Standard Input/Output (stdio), a direct inter-process communication method that avoids network overhead.

Privacy-First Architecture

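Local servers keep file, database, and application access on your machine. When an AI needs to read a private codebase, personal files, or a local database, the server handles the request entirely on-device and sends nothing to external services itself. Whatever the server returns is passed to the host's model, so for data to stay fully local the model must also run locally or the host must limit what it forwards.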

All operations require explicit user approval through the host application. Users maintain complete control over what the AI can access, modify, or execute—creating a trust model fundamentally different from cloud-based alternatives.

Performance Through Direct Communication

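By using stdio for inter-process communication, local servers keep transport overhead low, typically on the order of microseconds, so total response time is dominated by the work the tool itself does. This direct connection eliminates:

- Network transmission overhead (messages are still serialized as JSON, but they never leave the machine)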

- TLS handshake delays

- Internet routing latency

- API rate limiting

- Server queue wait times

For workflows involving frequent, small interactions—code analysis, file navigation, or real-time data lookups—this performance difference transforms user experience.
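To get a feel for the transport cost on your own machine, here is a rough measurement sketch. It spawns a stand-in echo child process (not a real MCP server) and times newline-delimited JSON messages over stdin/stdout pipes. The function name and message count are illustrative, results vary by machine, and the numbers say nothing about how long a real tool takes to run.

```python
import json
import subprocess
import sys
import time

# Stand-in child: echoes each line back. A real MCP server would parse the
# JSON-RPC message and run a tool instead.
ECHO_CHILD = (
    "import sys\n"
    "for line in sys.stdin:\n"
    "    sys.stdout.write(line)\n"
    "    sys.stdout.flush()\n"
)


def measure_round_trips(count: int = 200) -> list[float]:
    """Return per-message round-trip times in seconds over stdio pipes."""
    proc = subprocess.Popen(
        [sys.executable, "-c", ECHO_CHILD],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
        bufsize=1,  # line-buffered so each message is flushed promptly
    )
    timings = []
    try:
        for i in range(count):
            message = json.dumps({"jsonrpc": "2.0", "id": i, "method": "ping"})
            start = time.perf_counter()
            proc.stdin.write(message + "\n")
            proc.stdin.flush()
            reply = proc.stdout.readline()
            timings.append(time.perf_counter() - start)
            if json.loads(reply)["id"] != i:
                raise RuntimeError("echo did not match the request")
    finally:
        proc.stdin.close()  # end of input lets the child exit
        proc.wait(timeout=5)
        proc.stdout.close()
    return timings


if __name__ == "__main__":
    samples = sorted(measure_round_trips())
    median_us = samples[len(samples) // 2] * 1e6
    print(f"median round trip: {median_us:.0f} microseconds")
```

The measurement covers only pipe transport and JSON encoding in the parent; any disk, database, or model work a real tool does comes on top of it.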

Offline-First Capability

Local servers function independently of internet connectivity. AI assistants can continue providing value during flights, in areas with poor connectivity, or during network outages. This reliability makes AI-powered tools viable for mission-critical workflows where connectivity cannot be guaranteed.

How Local MCP Servers Work: Step-by-Step

Implementing a local MCP server follows a straightforward process, whether you're using an existing server or building a custom solution.

Step 1: Install the MCP Server

Download or build the server program for your use case. The Awesome MCP Servers repository provides dozens of pre-built options for common tasks like file system access, Git integration, or database connectivity. For custom needs, use the official SDKs, available for Python, TypeScript/Node.js, and C#.

Step 2: Configure the Host Application

Add the server to your AI client's configuration file (typically JSON format). Specify the server executable path and any required parameters. For example, a filesystem server configuration might look like:

```json
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/Users/username/Documents"]
    }
  }
}
```
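As a rough illustration of how a host might read this file, the sketch below turns each `mcpServers` entry into the command line it would spawn. The helper name `server_commands` is hypothetical, and treating `args` as optional is an assumption; real hosts may support more fields.

```python
import json

CONFIG_TEXT = """
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/Users/username/Documents"]
    }
  }
}
"""


def server_commands(config_text: str) -> dict[str, list[str]]:
    """Map each configured server name to the argv a host would spawn."""
    config = json.loads(config_text)
    commands = {}
    for name, entry in config.get("mcpServers", {}).items():
        # "command" is required; "args" is treated as optional here (assumption).
        commands[name] = [entry["command"], *entry.get("args", [])]
    return commands


if __name__ == "__main__":
    for name, argv in server_commands(CONFIG_TEXT).items():
        print(name, "->", " ".join(argv))
```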

Step 3: Launch and Connect

When you start the host application, it automatically launches configured servers as child processes. The client establishes stdio communication channels and performs a handshake to discover available tools and capabilities.
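The handshake itself is a short exchange of JSON-RPC messages. The sketch below builds the three messages a client typically sends at startup; the function name and client details are illustrative, and the protocol version string is an assumption that should match a revision your host and server both support.

```python
import json

PROTOCOL_VERSION = "2025-06-18"  # assumption: pick the revision both sides support


def handshake_messages(client_name: str, client_version: str) -> list[str]:
    """Build the newline-delimited JSON-RPC messages a client sends at startup."""
    messages = [
        # 1. Request: announce the protocol version and client capabilities.
        {
            "jsonrpc": "2.0",
            "id": 1,
            "method": "initialize",
            "params": {
                "protocolVersion": PROTOCOL_VERSION,
                "capabilities": {},
                "clientInfo": {"name": client_name, "version": client_version},
            },
        },
        # 2. Notification (no id): sent once the server's initialize response arrives.
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        # 3. Request: ask the server which tools it exposes.
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    ]
    # The stdio transport frames each message as a single line of JSON.
    return [json.dumps(message) for message in messages]


if __name__ == "__main__":
    for line in handshake_messages("example-host", "0.1.0"):
        print(line)
```

A real client waits for the server's reply to `initialize` before sending the notification; the function above only shows the message shapes and their order.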

Step 4: Grant Permissions

As the AI attempts to use server tools, the host application prompts for user approval. You can review exactly what action the AI wants to perform—reading a specific file, executing a command, or querying a database—before granting permission.
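How a host implements this prompt is up to the host. As one possible shape, the sketch below shows a hypothetical `ApprovalGate` that asks the user before every tool call unless the tool has been marked as always allowed. None of these names come from an MCP SDK.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApprovalGate:
    """Hypothetical host-side gate: ask the user unless the tool is pre-approved."""

    ask_user: Callable[[str, dict], bool]
    always_allowed: set[str] = field(default_factory=set)

    def permit(self, tool_name: str, arguments: dict) -> bool:
        """Return True if the call may proceed."""
        if tool_name in self.always_allowed:
            return True
        # Show the exact tool and arguments so the user can review the action.
        return self.ask_user(tool_name, arguments)


def console_prompt(tool_name: str, arguments: dict) -> bool:
    """Simple terminal prompt; a desktop host would show a dialog instead."""
    answer = input(f"Allow {tool_name} with {arguments}? [y/N] ")
    return answer.strip().lower() == "y"
```

A host could create `ApprovalGate(ask_user=console_prompt)` and call `permit(...)` before forwarding each tool call to a server.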

Step 5: Execute AI-Powered Workflows

With servers connected and permissions granted, you can use natural language to accomplish complex tasks. Ask the AI to "find all TODO comments in my project," "create a new React component," or "query the local database for recent transactions"—and the AI orchestrates the necessary tool calls through connected servers.
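Behind a request like "find all TODO comments in my project," the client sends a `tools/call` message and reads text items back from the result. The sketch below shows those two steps; the helper names are hypothetical, and the tool name `search_files` with its arguments is only an example of what a server might expose.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a tools/call request as one line of JSON."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def extract_text(response_line: str) -> str:
    """Join the text items of a tools/call result, raising on a protocol error."""
    response = json.loads(response_line)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    content = response["result"].get("content", [])
    return "\n".join(item["text"] for item in content if item.get("type") == "text")


if __name__ == "__main__":
    print(build_tool_call(3, "search_files", {"query": "TODO", "directory": "."}))
```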

Real-World Applications and Use Cases

Local MCP servers enable sophisticated AI workflows across development, research, and personal productivity domains.

💻 Software Development and Code Management

Scenario: A developer needs to refactor a large codebase while maintaining consistency across hundreds of files.

Traditional Approach: Manually search for patterns, update each file individually, run tests, and fix breaking changes—requiring hours of focused work.

With Local MCP Servers: The AI uses a filesystem server to scan the entire codebase, identifies all instances requiring changes, proposes modifications, and executes updates across multiple files simultaneously. A Git server commits changes with descriptive messages.

Key benefits:

- Semantic code understanding through language server integration

- Automated refactoring across entire projects

- Safe experimentation with instant rollback via Git integration

- Zero risk of proprietary code exposure

According to WorkOS's analysis of MCP architecture, developers using local servers for code assistance report 40-60% faster completion times for complex refactoring tasks.

📊 Local Database Analysis and Management

Scenario: A data analyst needs to explore a local PostgreSQL database, understand schema relationships, and generate reports.

Traditional Approach: Write SQL queries manually, export results to spreadsheets, create visualizations separately—a fragmented workflow requiring multiple tools.

With Local MCP Servers: The AI connects to the local database through an MCP server, explores the schema, generates optimized queries based on natural language requests, and formats results directly in the conversation.

Key benefits:

- Natural language database queries without SQL expertise

- Automatic schema exploration and relationship mapping

- Sensitive data remains entirely on-device

- Complex multi-table joins simplified through AI assistance
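The core of such a database tool can be small. The sketch below uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so it runs without extra dependencies; the function name is hypothetical. The `SELECT`-only check is a coarse guard for illustration, not a security boundary, and a real deployment would more likely rely on a read-only database role.

```python
import sqlite3


def run_read_only_query(conn: sqlite3.Connection, sql: str, limit: int = 100) -> list[dict]:
    """Run a single SELECT and return up to `limit` rows as dicts."""
    statement = sql.strip().rstrip(";")
    # Coarse guard: only one statement, and it must start with SELECT.
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("only a single SELECT statement is allowed")
    cursor = conn.execute(statement)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchmany(limit)]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE transactions (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO transactions VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
    print(run_read_only_query(conn, "SELECT id, amount FROM transactions ORDER BY id"))
```

Returning rows as dictionaries keeps column names attached to values, which makes the result easier for the AI client to format in the conversation.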

📱 Mobile Development and Testing

Scenario: A mobile developer needs to test an iOS app across multiple device configurations and screen sizes.

Traditional Approach: Manually launch simulators, navigate through app flows, capture screenshots, and document issues—repetitive and time-consuming.

With Local MCP Servers: An iOS simulator server allows the AI to programmatically control simulators, execute test scenarios, capture screenshots, and compile test reports automatically.

Key benefits:

- Automated UI testing across device configurations

- Natural language test case creation

- Instant visual regression detection

- Parallel testing on multiple simulators

🗂️ Personal Knowledge Management

Scenario: A researcher maintains thousands of documents, papers, and notes across various folders and needs to find specific information quickly.

Traditional Approach: Use file system search, manually open documents, scan contents, and compile findings—inefficient for large document collections.

With Local MCP Servers: The AI uses a filesystem server to search across all documents, extract relevant passages, summarize findings, and create organized reports—all while keeping sensitive research data local.

Key benefits:

- Semantic search across entire document collections

- Automatic summarization and information extraction

- Cross-document synthesis and connection discovery

- Complete privacy for confidential research

Building Custom Local MCP Servers

Creating a custom local MCP server is accessible to developers with basic programming knowledge. The official MCP server quickstart guide provides comprehensive tutorials.

Development Process Overview

Choose Your SDK: Official SDKs are available for Python, TypeScript/Node.js, and C#. Select based on your preferred language and the ecosystem of libraries you need to integrate.

Define Tool Functions: Implement the core logic for each capability you want to expose. For example, a file search tool might accept a query string and return matching file paths with excerpts.

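```python
import os

from mcp.server.fastmcp import FastMCP

server = FastMCP("file-search")  # server name is illustrative


@server.tool()
async def search_files(query: str, directory: str) -> list[dict]:
    """Search for files containing the query string."""
    results = []
    for root, _dirs, files in os.walk(directory):
        for name in files:
            path = os.path.join(root, name)
            try:
                with open(path, encoding="utf-8", errors="ignore") as fh:
                    # Keep the first matching line as a short excerpt.
                    hit = next((ln.strip() for ln in fh if query in ln), None)
            except OSError:
                continue  # skip unreadable files
            if hit is not None:
                results.append({"path": path, "excerpt": hit})
    return results
```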

Initialize the Server: Use the MCP library to create a server instance, register your tools, and configure stdio transport.
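To make those moving parts concrete, here is a from-scratch sketch of the loop an SDK runs for you: register tools, read one JSON-RPC message per line, dispatch it, and write one reply per line. It uses only the standard library and is not the SDK's implementation. The `tool` decorator, `TOOLS` registry, and `add` tool are hypothetical, and the sketch omits the `initialize` exchange and the tool descriptions and input schemas a real server returns.

```python
import json
import sys
from typing import Callable, Optional, TextIO

TOOLS: dict[str, Callable[..., object]] = {}


def tool(func: Callable[..., object]) -> Callable[..., object]:
    """Register a function as a tool under its own name (hypothetical helper)."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: int, b: int) -> int:
    """Toy tool used to exercise the loop."""
    return a + b


def handle(request: dict) -> Optional[dict]:
    """Dispatch one JSON-RPC message; notifications (no id) get no reply."""
    if "id" not in request:
        return None
    reply: dict = {"jsonrpc": "2.0", "id": request["id"]}
    method = request.get("method")
    params = request.get("params") or {}
    if method == "tools/list":
        reply["result"] = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        func = TOOLS.get(params.get("name"))
        if func is None:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            value = func(**params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": str(value)}]}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply


def serve(stdin: TextIO = sys.stdin, stdout: TextIO = sys.stdout) -> None:
    """Read one JSON message per line and write one JSON reply per line."""
    for line in stdin:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            stdout.write(json.dumps(reply) + "\n")
            stdout.flush()  # the client is waiting on this line
```

Calling `serve()` with no arguments would read from the process's own stdin, which is how a host-launched child process receives messages; passing file-like objects instead makes the loop easy to test.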

Handle Logging Correctly: The stdio transport carries JSON-RPC messages on stdout, so any other output written to stdout corrupts the protocol stream. Direct all logging to stderr or to separate log files:

```python
import logging

logging.basicConfig(
    level=logging.INFO,
    handlers=[logging.FileHandler('server.log')]
)
```
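If you prefer stderr over a log file, a minimal variant could look like the sketch below. The logger name `mcp_server` and the message format are arbitrary choices, not something the protocol requires.

```python
import logging
import sys

logger = logging.getLogger("mcp_server")  # hypothetical logger name
handler = logging.StreamHandler(sys.stderr)  # stderr keeps stdout free for JSON-RPC
handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the root logger is also configured

logger.info("server starting")
```

Many hosts capture a child process's stderr, so messages written this way often end up in the host's own logs.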

Test and Deploy: Test your server by configuring it in a compatible host application. Verify tool discovery, execution, and error handling before distributing.

Security Considerations

When building local servers, implement proper security measures:

- Input Validation: Sanitize all parameters to prevent path traversal or command injection

- Permission Scoping: Limit server access to specific directories or resources

- Error Handling: Provide clear error messages without exposing system internals

- Audit Logging: Record all operations for security review
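Input validation and permission scoping often come down to one check: does the requested path stay inside the directory the server is allowed to touch? The sketch below shows one way to do that with `pathlib`; the function name `resolve_inside` is hypothetical.

```python
from pathlib import Path


def resolve_inside(root: str, requested: str) -> Path:
    """Resolve `requested` under `root`, rejecting paths that escape it."""
    base = Path(root).resolve()
    # resolve() collapses ".." segments and follows symlinks before the check.
    candidate = (base / requested).resolve()
    if candidate != base and base not in candidate.parents:
        raise PermissionError(f"path escapes allowed directory: {requested}")
    return candidate


if __name__ == "__main__":
    print(resolve_inside(".", "notes/todo.txt"))
    try:
        resolve_inside(".", "../secrets.txt")
    except PermissionError as exc:
        print("rejected:", exc)
```

Checking the resolved path rather than the raw string matters because inputs such as `notes/../../secrets.txt` or an absolute path would slip past a simple prefix comparison.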

Frequently Asked Questions

How do local MCP servers differ from cloud-based AI APIs?

Local MCP servers run entirely on your machine and process data on-device, while cloud APIs transmit data to external servers. Local servers provide superior privacy, lower latency (sub-millisecond vs. 200-500ms), and offline functionality. Cloud APIs offer greater computational power and easier scaling for resource-intensive tasks. The optimal approach often combines both: local servers for sensitive operations and cloud services for heavy computation.

Can I use local MCP servers with any AI assistant?

Local MCP servers work with any host application that implements the MCP client protocol. Currently, this includes Claude Desktop, certain AI-enhanced IDEs, and custom applications built with MCP SDKs. As the protocol gains adoption, more AI tools will add native support. You can also build your own host application using official MCP client libraries.

Do local MCP servers require programming knowledge to use?

Using pre-built servers requires minimal technical knowledge—typically just editing a JSON configuration file to specify the server path. Building custom servers requires programming skills in Python, TypeScript, or C#, but the official SDKs and documentation make the process accessible to developers with basic experience. The Awesome MCP Servers repository provides ready-to-use servers for common tasks.

What are the performance requirements for running local MCP servers?

Local MCP servers have minimal overhead since they're lightweight programs focused on specific tasks. Most servers consume less than 50MB of RAM and negligible CPU when idle. Performance requirements depend on the specific operations—a filesystem server needs fast disk I/O, while a database server benefits from adequate RAM for query caching. Any modern computer from the past 5-7 years can run multiple local servers simultaneously without performance degradation.

Are local MCP servers secure for enterprise use?

Local MCP servers suit enterprise environments because the server processes data on the user's machine, and operations require explicit user approval through the host application. Organizations should still add controls: restrict which servers employees can install, audit server source code for security vulnerabilities, and enforce permission policies through host application configuration. Keeping processing on-device can make data residency and privacy requirements easier to meet.

Can local MCP servers work alongside cloud-based AI services?

Yes, local and cloud-based servers can work together seamlessly within the MCP architecture. A single AI assistant can use local servers for sensitive operations (accessing private files, querying local databases) while leveraging cloud servers for resource-intensive tasks (large-scale data processing, external API integrations). This hybrid approach combines the privacy and performance of local servers with the scalability of cloud infrastructure.

Conclusion: The Future of Privacy-First AI Integration

Local MCP servers represent a fundamental shift in how AI applications access and process user data. By keeping sensitive information on-device while enabling sophisticated AI capabilities, they solve the critical tension between functionality and privacy that has limited AI adoption in security-conscious environments.

The Model Context Protocol's standardized architecture ensures that as the ecosystem grows, developers can build once and integrate everywhere. Whether you're extending an existing AI assistant with custom tools or building entirely new applications, local MCP servers provide the foundation for secure, high-performance AI integration.

For developers and organizations prioritizing data privacy, offline functionality, and real-time performance, local MCP servers aren't just an option—they're essential infrastructure for the next generation of AI-powered tools.